In an earlier post I briefly touched on the subject of ingest performance and some of the tricks that Mitopia® uses to improve these ingest metrics in a distributed server context. In today’s post I want to take this discussion a little further, to discuss some of the problems with the metrics commonly used to measure performance, and propose some new metrics (used within Mitopia®) that are better able to capture the true searchable utility of whatever was ingested, and thus create a metric that actually measures something useful – a thing sorely lacking with current measures.

Currently there are essentially three metrics (and countless variants thereof) used to measure ingest performance for databases:

- Bytes/s – This measures the number of bytes per second of data of some kind that can be ingested into a database. This is clearly a silly and largely meaningless metric, because we must first ask what ‘ingest’ actually means here. Does it mean that you can search on each and every word ingested? How about sequences of words? What is the relationship between words and bytes (non-English text may require from 1 to 4 bytes per character in UTF-8, and the number of characters that make up a word is all over the map)? Is the search of words exact or stemmed? Can you search across languages? The list goes on. This is by far the easiest measure to come up with, since one need only total the size of all files fed in; however, as a real-world metric for comparison across systems, it is virtually useless.

- Documents or Records/s – Again this is an easy measure to compute, but it suffers from all the uncertainty of the Bytes/s measure, plus a whole load more, such as: How big is each document (or record)? Is the ingested item structured and searchable by metadata, word content, or something else? For database records, we must ask a whole new set of questions about the query operations then possible on the fields: which operators (numeric, text, etc.) are supported, Boolean logic between fields, range constraints, and so on. All these additional capabilities are critical to measuring the real utility of the ingest, and yet none are touched by this metric.

- Entities/s – This specialized metric measures the number of recognized entity names (e.g., people, organizations, products) identified in a block of text that is being ingested. The concept of an ‘entity’, as opposed to records or documents, suggests a move to an ontological, knowledge-level organization; however, this is rarely the case, and ultimately we are talking about specialized records in a relational database. Once again, we must ask: What exactly is an ‘entity’? What kinds of information are recorded for an entity? How extensive is our ability to search relationships between entities?
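To make the bytes-versus-words point concrete, here is a small Python illustration (not part of Mitopia®) that encodes the word for “knowledge” in three languages. Each sample is exactly one word, yet under UTF-8 they come to 9, 12 and 6 bytes respectively, so a Bytes/s figure says little about how many searchable words were ingested. The choice of UTF-8 and of these particular sample words is an assumption made purely for illustration.

```python
# One unit of meaning (the word "knowledge"), very different byte counts.
SAMPLES = {
    "English": "knowledge",
    "Russian": "знание",
    "Japanese": "知識",
}

def utf8_profile(word: str) -> tuple[int, int]:
    """Return (character count, UTF-8 byte count) for a single word."""
    return len(word), len(word.encode("utf-8"))

if __name__ == "__main__":
    for language, word in SAMPLES.items():
        chars, nbytes = utf8_profile(word)
        print(f"{language:8} {word}: {chars} characters, {nbytes} bytes")
```

Note that the Japanese word is the shortest in bytes while the Russian one is the longest, even though all three count as a single word.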

The result of these shortcomings is that while each metric measures something, none of them is of any real use in characterizing what has actually been accomplished at the end of the ingest, or how useful the resulting searchable data set is. The problem is aggravated many times over when one moves to a knowledge level (KL) system, where the focus is largely on the nature of the many diverse relationships (both direct and indirect) that may exist between extracted ontological content, and on how those relationships might influence the interpretation of meaning in textual documents that reference known entities (or otherwise).

In order to overcome these problems and come up with meaningful KL metrics, it became necessary to define two new units, the DRIP and the TAP (note the plumbing/data-flow metaphor!), for measuring the complexity of the information/knowledge extracted during, for example, a mining run.

The first unit is the DRIP (Data Relation Interconnect Performance), defined as E + R, where E is the total number of ontologically distinct entities OR data items ingested (as opposed to rows under the RDBMS table metaphor), and R is the number of explicit directed relationships created between them. Because relationships can be unidirectional or bidirectional (the latter being more useful), a unidirectional connection (e.g., a person’s citizenship) counts as an R score of one, while a bidirectional connection (e.g., an employment record) counts as two, one in each direction. The sum of the number of entities and the number of connections between them accurately measures the usefulness of what has been ingested for systems interested in relationships (presumably all KL systems). Entity ingest rate is measured in DRIP/s, or DRIPS. The DRIP measure, however, fails to take into account Mitopia’s ability to dynamically hyperlink, on a per-client-machine basis and at any time, between all textual data occurring within any record and every other item ever ingested. This ‘lazy’ creation of connections gives each system user far greater flexibility in analysis, and avoids freezing the data according to a particular focus or analytical technique during ingestion. It is unlikely that any other system can approach Mitopia’s current DRIP rate, and they certainly cannot provide this feature, so for comparative purposes we ignore this vast potential contribution to the DRIP measurement.
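A minimal sketch of the DRIP arithmetic, assuming a hypothetical `Relationship` record with a `bidirectional` flag (these names are illustrative only, not Mitopia® APIs). It simply applies E + R with the counting rule above: one for a unidirectional link, two for a bidirectional one.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Relationship:
    """Hypothetical explicit link between two ingested entities."""
    source: str
    target: str
    bidirectional: bool  # True if the link is traversable in both directions

def drip(entities: set[str], relationships: list[Relationship]) -> int:
    """DRIP = E + R: unidirectional links add 1 to R, bidirectional links add 2."""
    e = len(entities)
    r = sum(2 if rel.bidirectional else 1 for rel in relationships)
    return e + r

if __name__ == "__main__":
    entities = {"Alice", "Acme Corp", "France"}
    relationships = [
        Relationship("Alice", "France", bidirectional=False),   # citizenship
        Relationship("Alice", "Acme Corp", bidirectional=True),  # employment
    ]
    print(drip(entities, relationships))  # 3 + (1 + 2) = 6
```

The sketch counts only explicitly created relationships, which matches the decision above to leave lazily created hyperlinks out of the DRIP figure.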

The second unit is the TAP (Text Acquisition Performance), defined as T, where T is the total number of ingested and indexed words (not characters, regardless of language). By indexed we mean separately searchable on any and all text fields, such that the search time (on a single-server machine) does not exceed 1 minute when searching for all occurrences of any single word in any textual field of a data set where T is at least 10 million. This is an arbitrary cutoff criterion; however, given the vastly different search strategies used by various systems, it is hard to think of a limit that all would deem fair. In theory, because Mitopia® allows three (two for English) distinct search protocols that easily meet this criterion for every indexed word (native-language unstemmed, native-language stemmed, and root-mapped to English stemmed; see earlier post for details), our ingest rates should count as at least 2-3T, but once again we ignore this. Textual ingest rate is measured in TAP/s, or TAPS.
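A toy illustration of what T counts: every word occurrence that lands in an inverted index over all text fields. The tokenizer (a Unicode `\w+` regex plus lower-casing), the record layout and the `build_index` name are assumptions for illustration; the sketch does no stemming or cross-language mapping, and makes no attempt to reproduce Mitopia®'s indexing or the 1-minute search criterion.

```python
import re
from collections import defaultdict

# Naive, Unicode-aware word splitter (assumption: real systems use
# language-aware tokenizers, stemmers and cross-language mapping).
WORD = re.compile(r"\w+")

def build_index(records):
    """Index every word of every text field.

    Returns (index, t): index maps a lower-cased word to a list of
    (record_id, field_name) postings, and t is the TAP count, i.e. the
    number of word occurrences indexed (words, not characters).
    """
    index = defaultdict(list)
    t = 0
    for record_id, fields in records.items():
        for field_name, text in fields.items():
            for word in WORD.findall(text):
                index[word.lower()].append((record_id, field_name))
                t += 1
    return index, t

if __name__ == "__main__":
    records = {
        1: {"title": "Acme hires engineers", "body": "Acme is based in Paris"},
        2: {"body": "Paris office opens"},
    }
    index, t = build_index(records)
    print(t)               # 11
    print(index["paris"])  # [(1, 'body'), (2, 'body')]
```

For these two records T = 11, and a single-word lookup such as `index["paris"]` finds every field, in every record, that contains the word.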

By measuring the complexity of the output collection(s) from a mining run and dividing by the number of seconds the mining run took to complete, one can easily obtain meaningful ingest rates for various different sources. Some sources are more text-intensive and so exhibit higher TAPS ratings; others are more ‘connection’-intensive and exhibit higher DRIPS ratings. As a relatively balanced example, on a 2006-era quad-core (3 GHz) Mac Pro with hard drives only, doing end-to-end mining and persisting into servers (for subsequent search) of a standard data set containing around 25 different types of source, performance (measured using the “MitoPlex – Dump Storage DRIP/TAP measures” tool in the Admin window) was as follows:

- Total core time for mining phase: 4,158 s (average of about 1,040 s per core)

- All server activity quiescent after: 30 minutes (1800 s)

- Total textual data ingested (excluding image files): 278 MB

- Mining Rate (4 cores): 267 KB/s

- Total end-to-end rate (4 cores): 154 KB/s

- Total Server Complexity: 791.5 KDRIP, 5.27 MTAP

- End-to-end persist rate: 0.44 KDRIP/s, 293 KTAP/s

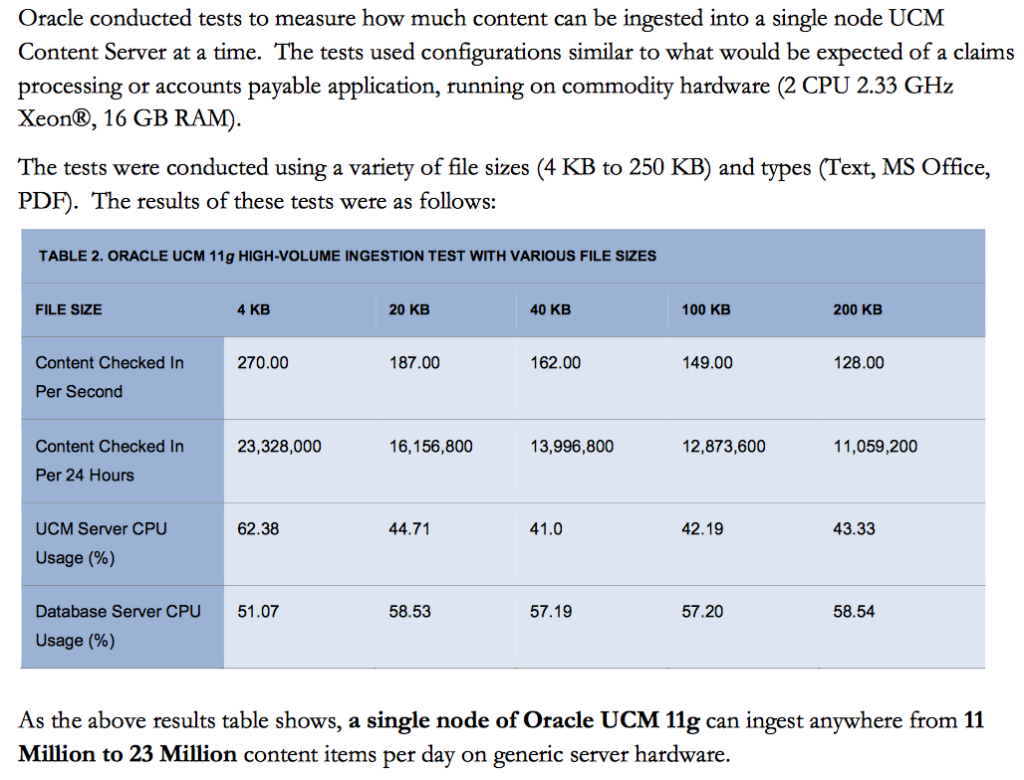
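The rates in the list follow from dividing totals by elapsed seconds. The short sketch below reproduces three of them; it assumes decimal units (1 MB = 1,000 KB) and that the mining rate uses the roughly 1,040 s per-core wall time rather than the 4,158 s total, since those are the assumptions under which the quoted figures come out. The constant names are illustrative.

```python
# Figures taken from the list above (decimal units assumed).
ELAPSED_S = 1800        # all server activity quiescent after 30 minutes
MINING_S = 1040         # approx. per-core wall time of the mining phase (4158 s / 4)
TEXT_KB = 278_000       # 278 MB of textual data, with 1 MB = 1,000 KB
DRIP_TOTAL = 791_500    # 791.5 KDRIP

def rate(total, seconds):
    """Ingest rate: a volume or complexity total divided by elapsed seconds."""
    return total / seconds

if __name__ == "__main__":
    print(f"Mining rate:     {rate(TEXT_KB, MINING_S):.0f} KB/s")              # 267
    print(f"End-to-end rate: {rate(TEXT_KB, ELAPSED_S):.0f} KB/s")             # 154
    print(f"Persist rate:    {rate(DRIP_TOTAL, ELAPSED_S) / 1000:.2f} KDRIP/s")  # 0.44
```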

As stated above, nobody else quotes such metrics, and indeed without a KL underpinning (which only Mitopia® actually has), a DRIP measure for a relational database system, even by the most generous interpretation, would be paltry. Relational databases, despite the name, are notoriously bad at representing massive and changing relationships between things. However, we can attempt a rough estimate of the very simple TAPS measure from benchmarks published on the web. For example, in a 2010 white paper on the Oracle 11g product, Oracle Corporation presents the following benchmark for a full-text search ingestion application:

From the above, taking the 23 million 4K documents/day figure (the highest performance), and assuming that the documents were in English and that the average English word is 5 letters long (plus one space between words, i.e., 6 bytes per word), the equivalent TAPS value would be 23,328,000 × 4 / 24 / 60 / 60 / 6 = 180 KTAP/s. This is of the same order of magnitude as the Mitopia® result (293 KTAP/s), although the range of text searches available in Mitopia® is in truth orders of magnitude greater when one includes things like phrase searches. After all, query performance on the ingested data is just as important as ingest rate (if not more so), and in this realm the gap between existing systems and Mitopia® only grows larger.
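The back-of-envelope conversion above can be written as a small function. It is a sketch that encodes the same assumptions (about 6 bytes per English word, and “4K” read as 4,000 bytes, as in the arithmetic above); the function name and parameters are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def taps_from_doc_rate(docs_per_day, doc_bytes, bytes_per_word=6):
    """Convert a documents-per-day ingest benchmark into an approximate TAP/s.

    Assumes English text averaging 5 letters per word plus one space,
    i.e. about 6 bytes per word (the same assumption as the text).
    """
    bytes_per_second = docs_per_day * doc_bytes / SECONDS_PER_DAY
    return bytes_per_second / bytes_per_word

if __name__ == "__main__":
    taps = taps_from_doc_rate(23_328_000, 4_000)
    print(f"{taps / 1000:.0f} KTAP/s")  # 180
```

Passing `doc_bytes=4_096` instead gives 184,320 TAP/s, which does not change the order-of-magnitude comparison.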

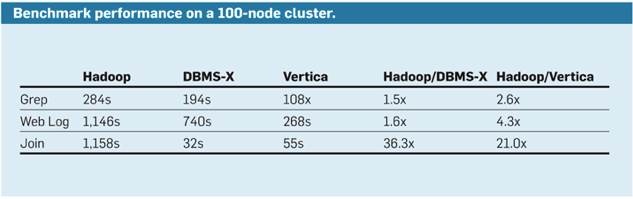

Of course, both rates can be scaled, and scalability as a function of the CPU and other resources required is another critical issue. This issue, as well as the far more powerful search mechanisms available within Mitopia® and their effect on true searchable utility (which even the DRIP and TAP cannot fully measure), is fully addressed in the ‘MitopiaNoSQL’ video, which compares Mitopia®'s real-world search utility, cost, and other metrics with those attainable through both relational and NoSQL systems. The results show that no existing system can come close to Mitopia’s performance in a scaled system.