In the previous post we discussed coding standards; in this one we examine the equally important subject of module/unit tests, and in particular the built-in module testing framework that Mitopia® provides and that MitoSystems’ coding standards mandate. A distinction is generally made between ‘unit tests’ and ‘module tests’: the former is normally a development-phase activity performed by the developer on a per-function basis, while the latter is normally thought of as something that happens after initial development and may be repeated regularly to ensure the module still performs as expected during maintenance. Module tests tend to test operation of an integrated set of functions more than unit tests do. In either case, these tests tend to involve running the target code within some kind of specialized testing harness which is quite distinct from the actual program the code is designed to be part of.

In Mitopia® we take the position that unit tests and module tests are one and the same, and that rather than being distinct from the target application, they are always part of it, and can in fact be invoked at any time from within the Mitopia® application itself. This is a fairly radical departure from normal approaches, and so perhaps we should start out by describing the reasoning behind this choice of approach.

The following are some key advantages of having module tests available in-place in the target application:

- If the tests run within the actual application environment, they better represent what will happen in the real thing. Isolated test harnesses tend to simplify things such that test results may not reflect real operation in place.

- By running within Mitopia®, test programs have access to all the API calls and all the debugging facilities that are built in. This also obviates the need to build custom harnesses, which might otherwise discourage module test development. Indeed, by having the module test in the same source file, it becomes perhaps the most expressive form of documentation as to how particular functions are invoked and should operate. This is critical to someone trying to debug in an unfamiliar module, since they can simply execute the module test and step through operation of the functions they are interested in without having to figure out how to create such a single-stepping opportunity another way within the real system. The result is that there is less temptation to put a ‘temporary’ change into the system in order to investigate something, and then forget to take it out. In a large system under active development, entropy from forgotten test statements (or temporary commenting out) can be one of the largest sources of new bugs. The built-in module test reduces this effect. As an aside, Mitopia® coding standards also mandate that any temporary code change made for testing purposes MUST be surrounded by an #ifdef TEMPORARY conditional compile block. The value TEMPORARY is always defined (for development builds); however, the rigor of always adding the #ifdef TEMPORARY makes such things trivial to find later if one is distracted, and has no doubt saved man-months over the project lifetime.

- Mitopia® coding standards mandate that the module test code be in the same source file as the module itself, which means module tests are far more likely to be maintained and used than would otherwise be the case. They also change as the code/API changes and thus help to document the consequences of the change.

- The ability to run a module test at any time within the actual target environment being debugged as a whole is an invaluable comprehension and fault isolation aid, and it removes any question of whether the code exercised by the module test is the same code that is running in the real system.

- As with all things, the Mitopia® philosophy is that everything one might ever need should be part of the application itself, even if not normally executed. This applies to module tests just as it does to help tools, code analysis, utilities, and all other things that need to ‘follow the application or development environment’. Build them in so they don’t get lost over time.

- Being in the same source file and standardized (see below), the unit/module test becomes the quickest way for the developer of a new package/abstraction to test any new functionality they have written even before the abstraction is complete. This then becomes how they initially test their code, and as the abstraction fills out, they add additional test steps at higher and higher levels of functionality and integration. The end result is that the first few test steps in a module test sequence tend to be closer to what one might expect from a unit test, while later steps transition to an integrated module test. The final module test is thus a combination of a unit test and a module test, and is so useful for developer testing that all new features are automatically added to the test suite, as that is simply the easiest thing to do to get them working. In the end, the module test is a very effective detector for anything that might be broken by a maintenance change. Even many years down the line, it is simpler to add some new edge case found in the field to the module test and find/fix the bug that way than it is to recreate the necessary conditions in the real system. Moreover, being in the same source file gives the test access to static routines as well, and so avoids having to publish things that shouldn’t be public simply to support testability.

- The whole trick is to make the module test and its maintenance easier to use for the developer than any other way they might try to step through their code. By doing that, one guarantees good module tests and that in turn guarantees robust and reliable code. Developers are always in a hurry, so if they think something else will be quicker than adding an additional module test step to test new functionality, they’ll usually do that instead. So we have to make this incredibly easy to code, and use, more so than any other approach they might try.
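The #ifdef TEMPORARY convention mentioned in the list above is easy to picture with a small example. The following is only a sketch: the function XX_DigitValue() is hypothetical, and TEMPORARY is defined locally here purely so the fragment stands alone (the text says development builds define it for you).

```c
#include <assert.h>
#include <stdio.h>

/* Assumption: development builds define TEMPORARY globally. It is defined
   here only so that this sketch compiles on its own. */
#ifndef TEMPORARY
#define TEMPORARY 1
#endif

/* Hypothetical package function that a developer is investigating. */
int XX_DigitValue(char ch)
{
#ifdef TEMPORARY
    /* Temporary debugging aids. Searching the code base for
       "#ifdef TEMPORARY" finds every block like this one later. */
    assert(ch != '\0');
    fprintf(stderr, "TEMPORARY trace: XX_DigitValue('%c')\n", ch);
#endif
    return (ch >= '0' && ch <= '9') ? (ch - '0') : -1;
}
```

Because the guard is always the same token, a single text search before release lists every piece of investigative code that still needs to be removed.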

Mitopia’s Module Test Suite

The basic concept behind Mitopia’s module test approach is that every test step should convert the results of code execution to some form of human-readable string. By defining an ‘expected’ value for this string and comparing it to the actual ‘achieved’ value, all test steps can be reduced to a standardized form, and the entire test step registration and execution process can be formalized and controlled within Mitopia® so that all test steps remain available at all times. If the two strings match, the step passes; if they don’t match, the framework can print out the expected and achieved strings, and the tester can easily see where and why they differ. The first time someone unfamiliar with this approach hears this strategy, they invariably say: well … not everything can be converted to a string like that; what if it’s a comms. thingy, multi-threaded, causes errors, or perhaps is user interface stuff, how do you convert that to a string? My answer is that all of these kinds of things are module tested within Mitopia®, and we have found ways to convert all of them to meaningful strings. It is really very simple. So, given that premise, let’s go ahead and describe the basic structure of a module test and the APIs one uses to register and execute it:

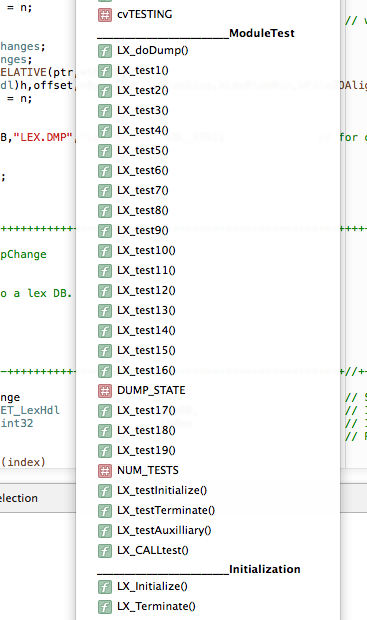

The screen shot above shows the final portion of the function popup within the development environment for the Lex.c package. Mitopia® coding standards dictate that every module have an initialization and a termination function, which must be named XX_Initialize() and XX_Terminate() where XX_ is the package prefix; they also mandate that these functions be at the end of the source file. Part of the reasoning is that this allows the initialization function to ‘see’ and register the entirely ‘static’ module test, which the coding standards dictate should appear immediately above the Initialization section as shown in the screen shot.

We can see from the screen shot that for the Lex package, the module test comprises 19 distinct test steps.
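The expected/achieved idea at the heart of each such step can be sketched in a few lines of C. This is not Mitopia® code: DemoContext, DemoCountWords(), and the achString field name are hypothetical stand-ins (only the expString field name appears in this post), and the point is simply that numeric results are rendered into one readable string before comparison.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define STR_MAX 256

/* Hypothetical, much simplified stand-in for a test context. */
typedef struct {
    char expString[STR_MAX];   /* what the step should produce     */
    char achString[STR_MAX];   /* what the step actually produced  */
} DemoContext;

/* Hypothetical function under test: counts space-separated words. */
int DemoCountWords(const char *s)
{
    int n = 0, inWord = 0;
    assert(s != NULL);
    for (; *s; s++) {
        if (*s == ' ') {
            inWord = 0;
        } else if (!inWord) {
            inWord = 1;
            n++;
        }
    }
    return n;
}

/* One test step: several results are rendered into a single string. */
void DemoStep1(DemoContext *ctx)
{
    assert(ctx != NULL);
    strcpy(ctx->expString, "0,1,3");
    snprintf(ctx->achString, STR_MAX, "%d,%d,%d",
             DemoCountWords(""),
             DemoCountWords("lex"),
             DemoCountWords("a  b c"));
}

/* A step passes only when the two strings are identical. */
int DemoStepPassed(const DemoContext *ctx)
{
    assert(ctx != NULL);
    return strcmp(ctx->expString, ctx->achString) == 0;
}
```

When a step fails, printing the two strings side by side shows which of the three results went wrong, which is the diagnostic benefit the string approach is after.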

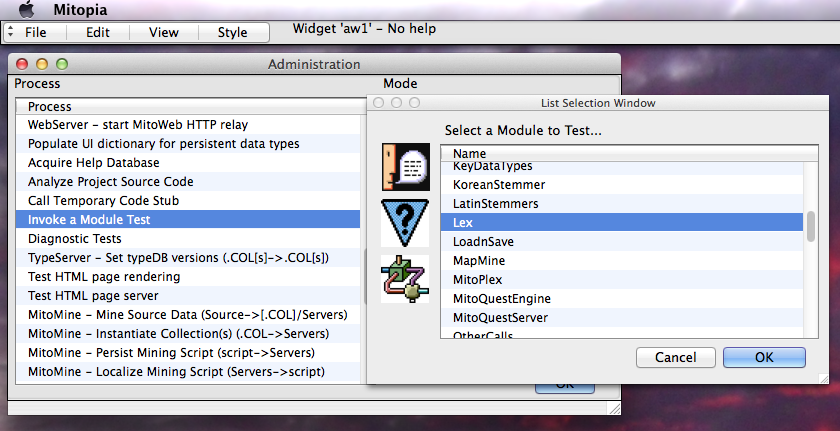

The module test itself is registered with the suite by calling DG_RegisterModule() as illustrated above. The function LX_CALLtest() provides the interface to the standard module test suite, and the second string parameter is the module name. This registration allows the module test to be run at any time from the built-in Administration window simply by choosing “Invoke a Module Test” and then picking the name of the module to be tested, as illustrated in the screen shot below:

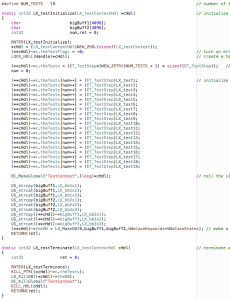
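For readers who cannot make out the screen shots, the register-by-name, invoke-by-name pattern can be approximated as follows. This is a sketch under stated assumptions: DemoRegisterModule(), DemoInvokeModuleTest(), and the LexDemo_ functions are hypothetical names, and the actual signature of DG_RegisterModule() is not reproduced here; the sketch only mirrors the description that a test entry point and a module name are registered from the package's initialization function.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_MODULES 32

/* Hypothetical entry point type: returns the number of failed steps. */
typedef int (*DemoModuleTestFn)(void);

typedef struct {
    const char       *name;
    DemoModuleTestFn  fn;
} DemoModuleEntry;

static DemoModuleEntry gModules[MAX_MODULES];
static int             gModuleCount = 0;

/* Register a module test under a name; 0 on success, -1 on failure. */
int DemoRegisterModule(DemoModuleTestFn fn, const char *name)
{
    if (fn == NULL || name == NULL || gModuleCount >= MAX_MODULES)
        return -1;
    gModules[gModuleCount].name = name;
    gModules[gModuleCount].fn   = fn;
    gModuleCount++;
    return 0;
}

/* Run a registered test by name; -1 if no such module is registered. */
int DemoInvokeModuleTest(const char *name)
{
    assert(name != NULL);
    for (int i = 0; i < gModuleCount; i++) {
        if (strcmp(gModules[i].name, name) == 0)
            return gModules[i].fn();
    }
    return -1;
}

/* Static test entry point, placed just above the initialization
   function so that the initializer can see and register it. */
static int LexDemo_CallTest(void)
{
    return 0;   /* pretend every step passed */
}

void LexDemo_Initialize(void)
{
    DemoRegisterModule(LexDemo_CallTest, "LexDemo");
}
```

A menu such as “Invoke a Module Test” then only needs to list the registered names and call the lookup function with the user's choice.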

The screen shot below illustrates the code necessary to register the various test steps with the test suite so that it can call them. Note that LX_testInitialize() defines a global using Mitopia’s OC_MakeGlobal() function to reference the test context handle ‘cHdl’. This makes the test context available to other code and threads throughout the system. In particular, should any test step cause an error report (in this thread or any other), the error logging facility will automatically insert the error details into the current ‘achieved’ string, because the module test suite registers a custom error handler for this purpose. A test step that is not expecting the error will therefore fail.

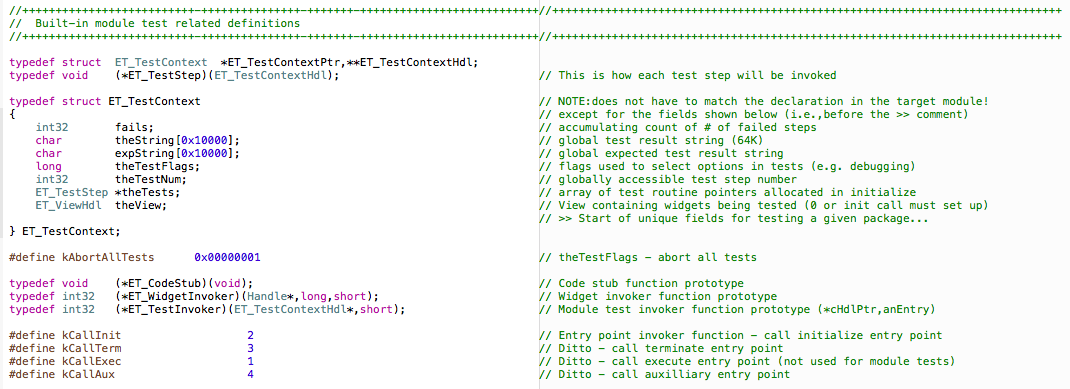

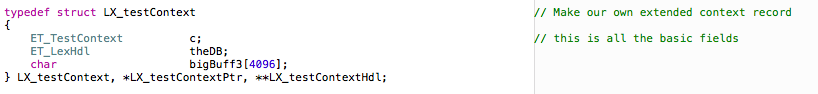
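The error-capture behaviour described above, where any error report lands in the current ‘achieved’ string, can be illustrated with a deliberately small model. Everything here is an assumption made for illustration: a plain static pointer stands in for the global created with OC_MakeGlobal(), DemoLogError() stands in for the error logging facility, and no attempt is made at thread safety.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define STR_MAX 256

/* Hypothetical, simplified test context. */
typedef struct {
    char expString[STR_MAX];
    char achString[STR_MAX];
} DemoContext;

/* Stand-in for the globally published test context reference. */
static DemoContext *gCurrentTest = NULL;

/* Hypothetical error logger. While a test is active, the error text is
   appended to the achieved string instead of only being logged. */
void DemoLogError(const char *msg)
{
    assert(msg != NULL);
    if (gCurrentTest != NULL) {
        size_t used = strlen(gCurrentTest->achString);
        snprintf(gCurrentTest->achString + used, STR_MAX - used,
                 "[ERROR: %s]", msg);
    } else {
        fprintf(stderr, "ERROR: %s\n", msg);
    }
}

/* A step whose code under test reports an error it did not expect.
   Returns 1 if the step passes and 0 if it fails. */
int DemoRunFaultyStep(DemoContext *ctx)
{
    assert(ctx != NULL);
    gCurrentTest = ctx;
    strcpy(ctx->expString, "ok");
    strcpy(ctx->achString, "ok");      /* the visible result is correct... */
    DemoLogError("bad token");         /* ...but an error was reported     */
    gCurrentTest = NULL;
    return strcmp(ctx->expString, ctx->achString) == 0;
}
```

The step's own result matches, yet the step still fails because the achieved string now reads "ok[ERROR: bad token]". A step that deliberately provokes an error would instead include the error text in its expected string.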

Every package defines its own unique module test context type (in this case LX_testContext) and these always start with the defined Mitopia® type ET_TestContext which is used by the module test suite to perform all its functions (see definitions below). In particular this structure is used to track the registered test steps and hold the expected and achieved strings for each step. Specific modules may add additional fields and structures to this generalized test context as required by their own module test code. In the case of the Lex test, it adds a lexical analyzer DB and a large buffer used internally by certain steps (see above).

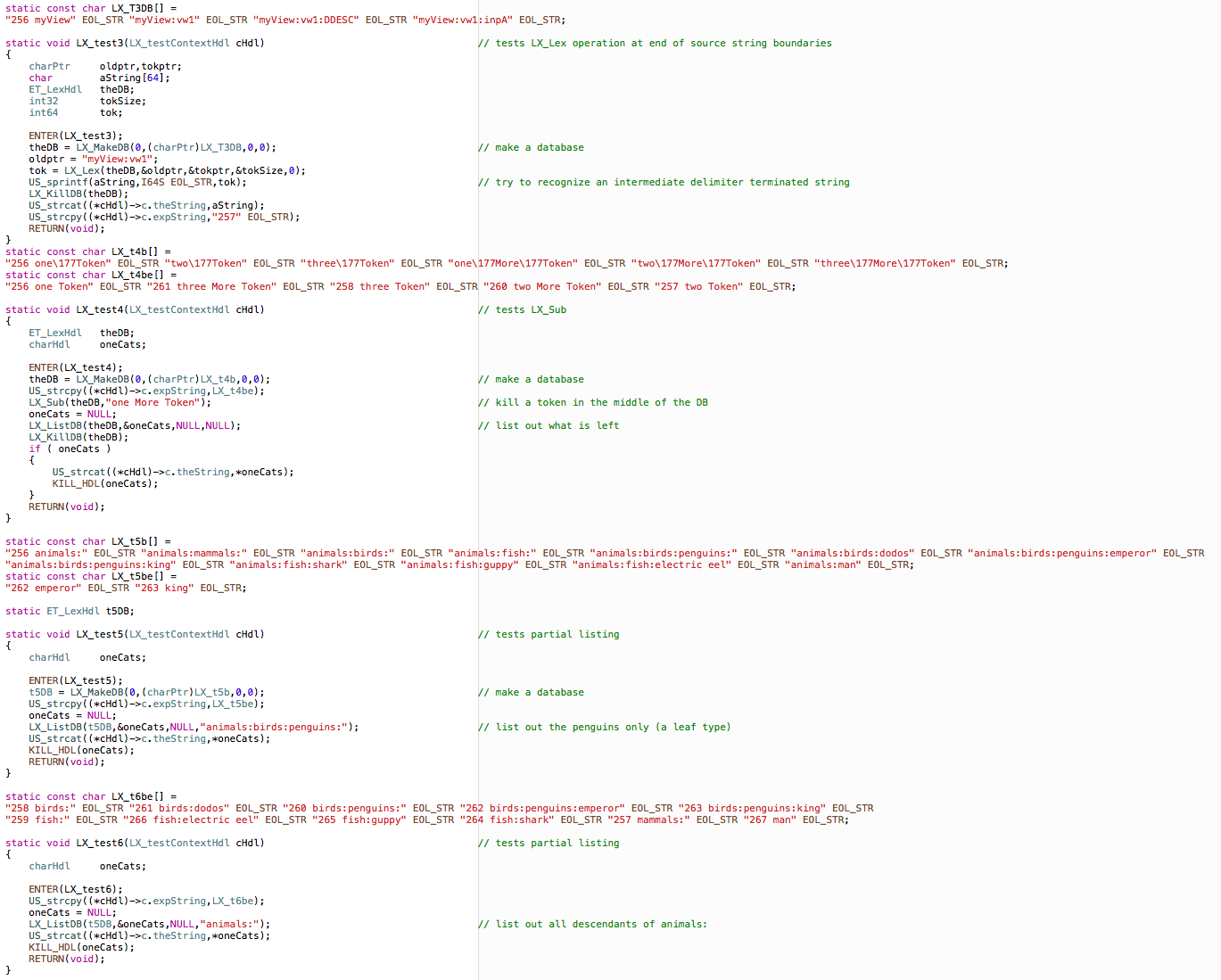
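The ‘always start with the generic context’ layout is a common C idiom, and a rough sketch may help readers who cannot see the definitions in the screen shots. The types below are hypothetical (DemoTestContext is not ET_TestContext, and its fields other than expString are guesses); the member name ‘c’ follows the ‘c.expString’ usage quoted in this post.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define STR_MAX 256

/* Hypothetical generic context used by the framework. */
typedef struct {
    int  stepCount;              /* guess: bookkeeping for registered steps */
    char expString[STR_MAX];
    char achString[STR_MAX];
} DemoTestContext;

/* Package-specific context: the generic part MUST be the first member. */
typedef struct {
    DemoTestContext c;
    char            scratch[1024];   /* package-private working buffer */
} LexDemoContext;

/* The framework only ever sees the generic type. */
int DemoCompare(const DemoTestContext *c)
{
    assert(c != NULL);
    return strcmp(c->expString, c->achString) == 0;
}

/* A package step uses its private fields and the generic ones. */
int LexDemoStep(LexDemoContext *ctx)
{
    assert(ctx != NULL);
    strcpy(ctx->c.expString, "HELLO");
    strcpy(ctx->scratch, "hello");
    for (char *p = ctx->scratch; *p; p++) {
        if (*p >= 'a' && *p <= 'z')
            *p = (char)(*p - 'a' + 'A');
    }
    snprintf(ctx->c.achString, STR_MAX, "%s", ctx->scratch);
    /* C guarantees that a pointer to a structure, suitably converted,
       points to its first member, so this cast is well defined. */
    return DemoCompare((const DemoTestContext *)ctx);
}
```

Because the generic structure sits at offset zero, the framework can manage every package's context through one pointer type while each package remains free to append whatever state its own steps need.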

Given all these definitions, we can now look at some simple early test steps for the Lex package (remember, early steps tend to be like function unit tests):

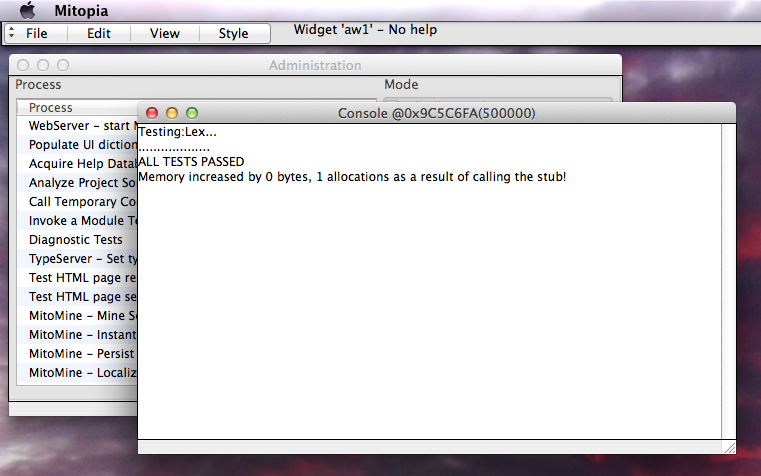

As you can see, each step sets up the expected string by copying a constant into the ‘c.expString’ field of the context; it then performs a sequence of actions using package functions in order to generate a matching sequence by testing logical operation of the package. When the test is run, the framework invokes each step in sequence and compares expected and achieved strings when complete, outputting a single ‘.’ to the console for a successful test, or the mismatching test step and expected/achieved for a failure. It also monitors for memory leaks caused by the test. A successful run of the Lex module test therefore looks as follows:

Obviously, the test steps above are relatively simple. To illustrate more complex module level tests, the screen shot below shows the first step in the Parse package test which is in fact testing creation and operation of a full C expression parser by comparing its results to those obtained from the equivalent C program. This single test step tests a broad range of Parse.c functionality as well as correct operation of the underlying Lex.c package and many other features. In fact, module tests if correctly targeted at the uppermost functions (which in turn require lower level functions to operate correctly) can consist of a relatively small number of steps in order to provide broad coverage. Remember also, as the Parse example illustrates, that because Mitopia® code is so heavily layered on lower level abstractions, module tests for dependent abstractions (in this case Parse) further confirm correct operation of lower level abstractions (i.e., Lex) within the actual target environment. The full suite of registered module tests if run (and passed) thus provides a fairly high degree of confidence that any given change made during maintenance did not break something else. Indeed such tests in Mitopia® are also fundamentally a major part of integration testing since they all occur within the real application including of course access to persistent storage, GUI, and everything else. Some Mitopia® module tests further up the abstraction pyramid wipe the LOCAL server content (after a suitable warning to the user) and then mine and persist significant data sets, which they then use to test both client and server side operation.
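Whether a step is as small as the Lex examples or as broad as the Parse one, the framework treats it the same way: run it, compare the two strings, and report. A minimal sketch of such a run loop is shown below. All names are hypothetical, the output format is an assumption, and the memory leak monitoring mentioned earlier is left out.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define STR_MAX 256

/* Hypothetical, simplified test context. */
typedef struct {
    char expString[STR_MAX];
    char achString[STR_MAX];
} DemoContext;

typedef void (*DemoStepFn)(DemoContext *ctx);

/* A step that passes: expected and achieved are both "3". */
void DemoStepPass(DemoContext *ctx)
{
    strcpy(ctx->expString, "3");
    snprintf(ctx->achString, STR_MAX, "%d", 1 + 2);
}

/* A step that fails on purpose, to show the mismatch report. */
void DemoStepFail(DemoContext *ctx)
{
    strcpy(ctx->expString, "abc");
    strcpy(ctx->achString, "abd");
}

/* Run every step in order; returns the number of failed steps. */
int DemoRunSteps(DemoStepFn const *steps, int count,
                 DemoContext *ctx, FILE *out)
{
    int failures = 0;
    assert(steps != NULL && ctx != NULL && out != NULL);
    for (int i = 0; i < count; i++) {
        ctx->expString[0] = '\0';
        ctx->achString[0] = '\0';
        steps[i](ctx);
        if (strcmp(ctx->expString, ctx->achString) == 0) {
            fputc('.', out);               /* one dot per passing step */
        } else {
            failures++;
            fprintf(out, "\nStep %d FAILED\n  expected: %s\n  achieved: %s\n",
                    i + 1, ctx->expString, ctx->achString);
        }
    }
    fputc('\n', out);
    return failures;
}
```

Passing an array of step functions together with `stdout` prints a row of dots for a clean run, and the return value makes it easy for a caller to run many module tests in sequence and total the failures.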

As can be seen, for a relatively small one-time effort in creating and registering the test step, the developer (and maintainer) using the Mitopia® module test suite gains a permanent way of testing that all functionality in the module is working as intended, an easy way of stepping through code to understand it, and documentation of how package functions can be combined and invoked in order to accomplish complex things. Best of all, none of this is ever lost as developers leave or are replaced. Mitopia’s built-in module test suite thus represents a key pillar making the code base resistant to ‘entropy’ and bugs caused by ill-conceived maintenance actions on the code. As we all know, most bugs are introduced during maintenance, and most of the cost of a piece of software is actually incurred hunting these bugs during maintenance (some estimates go as high as 90%).

Built-in module tests should therefore be a required feature, enforced by coding standards, and integrated into the actual product, in any software designed for longevity.