In this post I want to talk about how individual software programs ‘evolve’ over time and what determines their longevity, particularly with regard to the integration of commercial off-the-shelf (COTS) software. The seminal work in this field was conducted by Meir Lehman at IBM in the 1970s. Studies have shown the average software program lifespan over the last 20 years to be around 6-8 years. Longevity increases somewhat for larger programs, so that for extremely large complex programs (i.e., over a million lines of code, or LOC) the average climbs as high as 12-14 years. This increased longevity is directly related to the huge cost and inconvenience to an organization of replacing such programs. Nonetheless, 12-14 years is not very long when one considers the risk and the investment of time and money required to develop and maintain a 1M+ LOC program; over its lifetime, such a program can easily cost tens or hundreds of millions of dollars.

For very small programs the average programmer can be expected to develop as much as 6,000 LOC/year; for very large ones the figure drops off drastically. During the maintenance phase (i.e., after the program is delivered), productivity on large programs has been shown to be a tiny fraction of that achievable during development (as illustrated in the Function Point graph to the right). Mitopia® has built-in code analysis tools that have been used regularly since 1993 to measure and analyze the code base. Historical results show average programmer productivity over the past 5 years to be over 30,000 LOC/yr., which is off the chart even for a simple 10K LOC program according to COCOMO, and 3 times the maximum for initial development of 1M LOC programs, never mind maintenance. Just to be clear, the LOC measure has little to do with real functionality; indeed most Mitopia® customization now occurs through scripts and dynamically interpreted languages, which have an expressivity orders of magnitude higher than any compiled code we might discuss herein, and which MitoSystems does not track in our historical LOC data.
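To see why development productivity falls with program size, it helps to look at the shape of the COCOMO model mentioned above. The sketch below uses Boehm's original *basic* COCOMO (1981), with its published coefficients for the three project modes; the post does not say which COCOMO variant or calibration its comparison relies on, so this is only an illustration of the trend, not a reproduction of the figures quoted. Because the exponent `b` is greater than 1, effort grows faster than code size, and implied LOC per person-year drops as programs get larger. The function names are illustrative.

```python
# Basic COCOMO (Boehm, 1981): effort E = a * KLOC**b, in person-months.
# Coefficients are the published basic-model values for the three project
# modes; they are used here only for a rough illustration of the trend.
COCOMO_MODES = {
    "organic": (2.4, 1.05),
    "semi-detached": (3.0, 1.12),
    "embedded": (3.6, 1.20),
}


def effort_person_months(kloc: float, mode: str) -> float:
    """Estimated development effort in person-months for `kloc` thousand LOC."""
    a, b = COCOMO_MODES[mode]
    return a * kloc ** b


def loc_per_person_year(kloc: float, mode: str) -> float:
    """Implied average development productivity in LOC per person-year."""
    return kloc * 1000 / effort_person_months(kloc, mode) * 12


if __name__ == "__main__":
    for kloc in (10, 100, 1000):
        for mode in COCOMO_MODES:
            print(f"{kloc:>5} KLOC {mode:<13} "
                  f"{loc_per_person_year(kloc, mode):>7,.0f} LOC/person-year")
    # Ratio of the 30,000 LOC/yr figure quoted in the text to the model value
    # for a small (10 KLOC) organic project.
    print(f"ratio: {30_000 / loc_per_person_year(10, 'organic'):.1f}")
```

Under these assumptions a 10 KLOC organic project comes out at a little under 4,500 LOC per person-year, and every mode declines as size grows toward 1M LOC.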

First let us eliminate the ‘trivial’ from our considerations. The current life expectancy of 6-8 years (perhaps half that for mobile apps) is made up almost exclusively of ‘small’ programs (less than 100K LOC) that build upon existing functional libraries to deliver a product that by its very nature has a short viability. The predominance of ‘tiny’ mobile apps in today’s statistics is driving longevity figures down, and we cannot consider such simple systems or their market dynamics relevant to our discussion of software survival and evolution into old age. Small programs are easy to replace or plagiarize; life in this realm is short and brutal: breed fast, die young. There is little need for a serious ‘maintenance’ phase in such software; the life-cycle is too short to bother, and it is easier to replace the functionality entirely.

By contrast, in larger programs it has been shown that over 90% of the cost and effort is incurred in the maintenance phase, not in development as most people think. Big complex programs, rather than being built from the ground up (an intimidating and usually impractical strategy), tend to be assembled by ‘integrating’ a variety of building-block technologies, or commercial off-the-shelf software (COTS). This means that the developer is free to focus on the unique ‘glue’ that is the specific application program being developed, while ignoring the complexity of building underpinnings like a relational database, GUI frameworks, functional libraries, and the many other familiar subsystems that make up any large program. This COTS approach is favored by all the large systems integrators. Unfortunately, even though the US government (and others) has favored this approach over proprietary development for decades, people now realize that it comes with a serious downside:

This then is the major effect that causes the untimely death of large software programs. I call it the ‘COTS cobbling’ problem, and I have spent much time over the years trying to warn government agencies of the dangers (a futile effort, of course; see the first post on this site for an example). The dangers are by no means limited to premature project death; they also include a decline in functionality to the ‘lowest common denominator’, driven by COTS platform and compatibility constraints rather than by actual needs. The ‘Bermuda triangle’ problem is perhaps the most common and obvious example of the broader COTS cobbling issue.

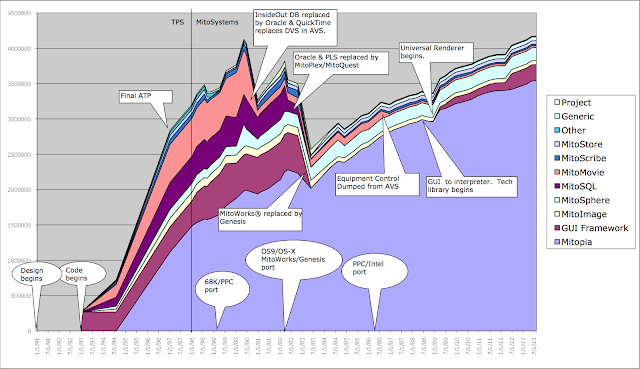

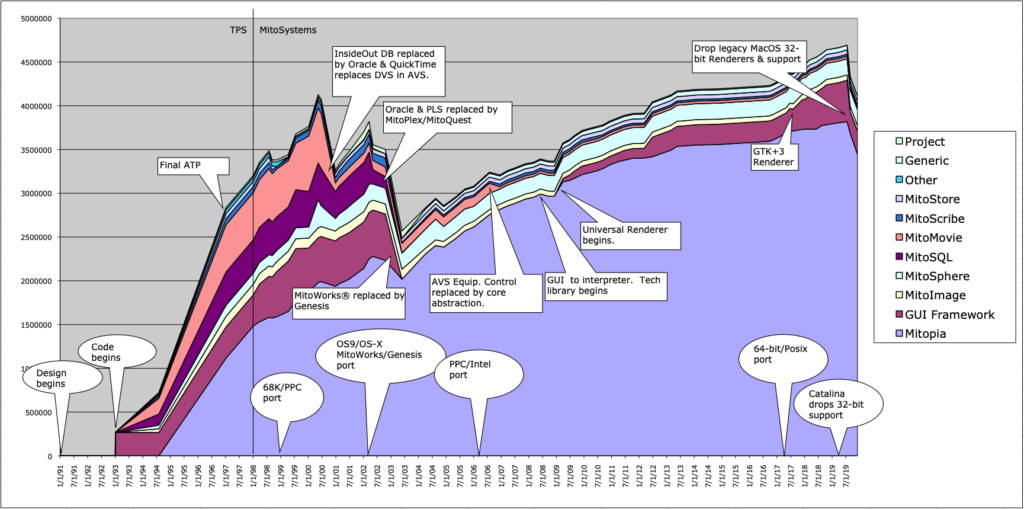

Figure 2: Evolution of the Mitopia® code base, 1991-2013

The graph shows the total size of the code base over the 22-year period, broken down by the various subsystems that make up the entire Mitopia® environment. Various critical ‘change’ events are annotated on the diagram. Overall, a key thing to note is that, excluding the early code mountain ranges, long since eroded, the rate of new code creation has remained roughly constant over the entire life-cycle (Lehman’s 4th law). This is despite the fact that during initial development there were approximately 20 times as many full-time developers working on the code as there are today. The fraction of the code base implemented as fundamental application-independent abstractions within core Mitopia® code (the purple area of the graph) has risen from an initial value of around 5%, to just under 50% when MitoSystems itself took over in 1998, to around 85% today. In that same time frame, application-specific code (i.e., not abstracted and generalized) has dropped from 95% at the outset to just 0.3% today. This is a key indicator of a mature and adaptive ‘architecture’ rather than a domain-specific application. This universal use of, and familiarity with, a few very powerful abstractions to do virtually everything has been responsible for the constant increase in programmer productivity (up to 30K LOC/yr. today from just 7.5K LOC/yr. prior to ATP), Lehman’s 5th law. This change has allowed software team size and costs to drop by such a large factor (20x) while total productivity has remained unchanged or even increased.

Lehman’s “Second Law” of software evolution (compare with 2nd law of thermodynamics) states:

“The entropy of a system increases with time unless specific work is executed to maintain or reduce it.”

The gradual consumption of other subsystem code by the core abstractions within Mitopia® depicted in Figure 2 shows that MitoSystems’ approach to software evolution has always been driven largely by the desire to reduce software entropy. It is this fact more than any other that explains program longevity. Mitopia® is what Lehman defines as an E-program, and as such is governed by all 8 of Lehman’s laws. Many of the other posts on this site address the core Mitopia® abstractions and how their expressive power allows broad applicability and the removal of domain-specific code edifices.

At this point it must be said that the design documents produced by the end of the two-year design phase that started in 1991 already specified many of the most basic abstractions in great detail, so one would expect that there would be little or no wasted or duplicative code developed in the other subsystems. It is the ultimate elimination of this waste and duplication that partially accounts for the jagged peaks that characterize the first half of the graph (i.e., the first decade of software life, by which time most programs have already died). The truth of the matter is that initial development of the low-level Mitopia® abstractions themselves took nearly four years to complete, and in the meantime other subsystem developers were forced to create alternate piecemeal solutions themselves, sometimes by incorporating COTS, in order to move their code bases forward. The pressure to deliver concrete functional capabilities to the customer, despite prior warnings of how long such a system development might take, had much to do with this early mountain formation. I myself got pulled into implementing the core abstractions despite my planned intent to stay out of the code entirely. This in turn caused a lack of competent technical supervision elsewhere during this period. As we all know, programmers are infinitely harder to herd than cats, so the ultimate result was a COTS cobbling approach early on. It was never the plan, but it happened anyway. Mea somewhat culpa.

As the chart shows, by around the year 2000, the code base had swollen so much it was larger even than it is today. Worse than that, because of the large diversity of approaches in the code, and particularly the large number of COTS and external libraries involved in the non-core subsystems, programmer time was almost entirely consumed by maintenance activity for the reasons already discussed. As a result, little or no actual forward progress in terms of non-core functionality was occurring. Meanwhile the core Mitopia® code which was (and remains) wholly independent of any other technology (except the underlying OS) continued to expand in capabilities. This dichotomy prompted a detailed examination of the underlying causes, and resulted in identifying ‘COTS cobbling’ as the fundamental issue and enemy of productivity over the long term.

In 2000 we decided we had to tackle this issue, since we could no longer tolerate the entropy and maintenance workload caused by so many diverse technologies. First to go were the custom database library (InsideOut) and the wrappers used internally by the audio/video subsystem (pink area labelled MitoMovie™) to track external equipment and allocation for video capture and streaming. These were folded into the Oracle database used elsewhere at the time. The continued development and maintenance of our own video CODEC, ATM network driver, and streaming technology (known as DVS) also no longer made sense, so we re-engineered to use QuickTime. Moreover, for a couple of years (starting in ’99) we had been forced to run in 68K emulation on Apple’s new PPC machines (there were heterogeneous installations at the time) because we used a purchased ‘stemming’ library that was built for the 68K processor, and a PPC replacement was not on the cards. We were thus forced to develop our own replacement stemming technology. The first precipitous drop in code size caused by these changes can be seen in the chart during the year 2000.

In 2002, our ability to continue running under Mac OS-9 came to an end, as we knew it ultimately would. Apple had moved entirely to the OS-X architecture, and while the ‘Carbon’ APIs from OS-9 were still supported, three of the largest and most heavily used external COTS chunks were dead in the water with the change in OS. The Oracle database, while supported (somewhat) under OS-9, was at the time unsupported on OS-X, with no plans to change this in the foreseeable future. The same was true for the Personal Librarian Software (PLS) we had incorporated into our database federation to handle inverted-file text searching across languages. Equally disastrous was the fact that our internal MitoWorks™ GUI framework no longer made sense in an OS-X environment, and a replacement would be needed at the same time as all the other changes. 2002 constituted the single most disastrous illustration of the danger of COTS to life expectancy. If these components had been our own code, all we would have had to do was recompile them. It was a harsh but useful lesson.

For most projects, such a combination would have been instantly fatal; however, due to the unique nature of our primary customer, we were able to embark on a 1.5-year re-implementation of all three obsolete components, which was responsible for the single largest drop in non-core code (see chart from 2002-2003). In this one transition we shed around a third of the entire code base, while emerging at the end with enhanced capabilities, vastly improved performance, and a code structure now almost entirely implemented as core abstractions. We were now dependent on just 3 external COTS components (down from closer to 10 at the outset). The remaining dependencies were the underlying OS (now OS-X), the replacement GUI framework (known as Genesis), and a GIS library (which itself had replaced an earlier choice when the original vendor went out of business). Best of all, after the transition, programmer productivity increased dramatically and the time spent treading water in maintenance dropped proportionately, allowing a subsequent up-tick in the rate of introducing new functionality into the architecture.

Throwing out the relational database and inverted text engine turned out to be one of the best things we ever did, since it led to the MitoPlex™ and MitoQuest™ core technologies, both built directly on the core Mitopia® abstractions. The resulting code simplifications were only outweighed by the massive increases in performance and scaling we were able to realize at the same time. The same was true of switching to the new GUI platform, which ultimately delivered improved visualizers and enabled rapid development of the data-driven GUI approach to previously unthinkable levels. The combination made the architecture ‘adaptable’ to new domains in time frames that were fractions of those required earlier.

The whole experience, while traumatic at the time, made it clear that COTS cobbling is the enemy not only of productivity, but also of adaptability, functionality, performance, generality, cost, flexibility, and a whole host of other metrics that we had previously thought to be immutable facts of software life. Not so: poor scores on these dimensions over time turn out to be largely driven by the compromises that COTS dictate. It became clear that we should not stop there, but should re-examine the three remaining COTS items to see if even they could be eliminated to achieve greater performance. COTS programs are S or P programs (in Lehman’s language), not E-programs, and depending on S or P programs will usually be the undoing of an E-program. I would propose a 9th law to add to Lehman’s, as follows:

Proposed 9th Law: “Each significant external software component that an E-program relies on for internal functionality, will incrementally reduce that E-program’s life expectancy.”

In other words, it’s not just about how you structure and maintain your own code; longevity is just as much about the number and type of the external technologies you rely on. External reliances introduce as much entropy as sloppy maintenance changes ever could, if not more.

Figure 2 above from 2003 onwards shows a smooth process of increased programmer productivity, core abstraction functionality, and gradual independence from the remaining COTS components. Such independence remains our top technical goal. It took 20+ years of experience on a single code base to realize how critically important this is. Alas, few programmers ever work on one large code base for so long, mainly because few projects survive this long, which in turn is because the developers rarely get to see how damaging external COTS can be over a multi-decade software lifespan. It is a vicious cycle that few can even perceive, and which by its very scale prohibits serious academic investigation. Trust me though, it is real.

Over the years following 2003, we implemented our own ontology-based GIS subsystem, entirely built upon core Mitopia® abstractions. One more COTS component bites the dust. Other minor dependencies have also been systematically eliminated. Starting in 2008, a new core-abstraction-based universal renderer approach has incrementally replaced the use of the third-party Genesis GUI framework, as a kind of background coding task when time permits. At present, both renderers remain simultaneously active within a single instance of Mitopia® (through the new GUI interpreter abstraction). Different widgets can pick different preferred renderers (there are now others), so that at this point in time Genesis is used only in a few places (mostly visualizers). Soon it too will be optional, and the last COTS dependency, other than the underlying OS, will be gone.
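One way to picture several renderers coexisting in one process, with each widget naming the renderers it prefers, is a registry of active renderer plugins that resolves an ordered per-widget preference list. The sketch below is purely hypothetical: `RendererRegistry`, `Widget`, `preferred_renderers` and the string-returning "renderers" are illustrative assumptions, not the API of Mitopia®'s GUI interpreter abstraction, which this post does not describe.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical: a renderer is modelled as a callable that "draws" a widget
# by returning a string; a real renderer plugin would do far more.
Renderer = Callable[[str], str]


@dataclass
class RendererRegistry:
    """Holds every renderer plugin currently active in one process."""
    renderers: Dict[str, Renderer] = field(default_factory=dict)

    def register(self, name: str, renderer: Renderer) -> None:
        self.renderers[name] = renderer

    def unregister(self, name: str) -> None:
        self.renderers.pop(name, None)

    def resolve(self, preferences: List[str]) -> str:
        """Return the first preferred renderer that is currently registered."""
        for name in preferences:
            if name in self.renderers:
                return name
        raise LookupError(f"no renderer available among {preferences}")


@dataclass
class Widget:
    name: str
    # Ordered preference list; the first available entry wins.
    preferred_renderers: List[str]

    def draw(self, registry: RendererRegistry) -> str:
        chosen = registry.resolve(self.preferred_renderers)
        return registry.renderers[chosen](self.name)


registry = RendererRegistry()
registry.register("universal", lambda w: f"universal:{w}")
registry.register("genesis", lambda w: f"genesis:{w}")

button = Widget("button", ["universal"])
visualizer = Widget("map_visualizer", ["genesis", "universal"])

print(button.draw(registry))      # universal:button
print(visualizer.draw(registry))  # genesis:map_visualizer

# Making Genesis optional: once it is unregistered, widgets that preferred
# it fall back to the next renderer in their preference list.
registry.unregister("genesis")
print(visualizer.draw(registry))  # universal:map_visualizer
```

The design choice being illustrated is that widgets never bind directly to one renderer, so retiring a third-party renderer becomes a matter of unregistering it once no widget depends on it exclusively.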

The OS will undoubtedly be next, as Apple’s tendency to deprecate C APIs in favor of Objective-C replacements becomes sufficiently irritating that it will ultimately force a full-scale elimination of OS dependencies. MitoSystems sees Objective-C as just another ‘fad’ language (i.e., COTS) to be avoided, even if platform-mandated.

The moral of the story? If you want your program to live to a ripe old age, don’t trust COTS…or is that cats, I can never be sure.

UPDATE Jan 2020: The diagram above shows the project history as of January 2020. The updated chart validates the predictions made in the original post of August 2013, namely that yet another OS transition, this time dropping support for 32-bit processes completely (in OS-X Catalina), would force a second major re-engineering effort, 15 years after the first. This impending event triggered a porting project in 2017 to remove all dependencies on the underlying Mac OS and re-implement the code to rely only on the Posix core libraries. The Posix core is available cross-platform, including Mac OS, Unix, Linux, and even Windows (with a bit of work). As with the previous OS-9 to OS-X transition, this one forced a move to a new universal renderer plugin (based on GTK+3) and the elimination of all previous 32-bit renderers (including Genesis, Carbon, and others) and their support. The impact on code base size can be seen in the second cliff at the right of the graph, as old/legacy code is dumped (see Lehman’s laws #2 and #7). The Mitopia® technology is now 32/64-bit agnostic and cross-platform, with just a single dependency, namely the Posix core libraries (which are pervasive on virtually all platforms). A new ‘headless’ renderer plugin (using the terminal) now allows Mitopia® to run in embedded systems (and server blades) without GUI overhead.

It was a good thing we chose to completely ignore Objective-C and Apple’s Swift language, which quite quickly replaced it: language fads, as anticipated. If we had followed Apple’s language trends, a cross-platform port to Posix would have been extremely difficult, if not impossible. As it is, the Mitopia® code base is now almost 30 years old, and still evolving, relevant, and groundbreaking, particularly in its emergent role as an Artificial General Intelligence (AGI) framework (see this post).