In a number of previous posts I have mentioned the decision cycle or OODA loop. Understanding the OODA loop is fundamental to creating an adaptable and continuously relevant knowledge system, in exactly the same way as it is to winning an aerial dogfight (the context in which Colonel John Boyd first conceived the theory). OODA loop theory plays a central role in the design and specification of the Mitopia® architecture, so before I can fully explore adaptability within Mitopia®, it is perhaps wise to discuss the decision cycle in more detail.

Colonel John Boyd (1927-1997) was a US Air Force fighter pilot whose theories have influenced many fields beyond the military, including sports, business, and competitive endeavors of all kinds.

Boyd’s key concept was that of the decision cycle or OODA loop, the process by which an entity (either an individual or an organization) reacts to an event. According to this idea, the key to victory is to be able to create situations wherein one can make appropriate decisions more quickly than one’s opponent. The construct was originally a theory of achieving success in air-to-air combat, developed out of Boyd’s Energy-Maneuverability theory and his observations on air combat between MiG-15s and North American F-86 Sabres in Korea.

In evaluating the reasons behind the success rates experienced by US pilots in dogfights, despite the apparent fact that the MiG-15 was the more powerful and capable aircraft, Boyd identified two main factors behind the F-86’s success. First, the F-86 fighter’s canopy was larger than that of the opposing MiG-15, providing a greater field of vision. Second, although the F-86 was larger and slower, it was more maneuverable (due to a higher roll-rate), allowing US pilots to make more frequent adjustments.

US pilots were thus able to observe their surroundings and detect changing circumstances faster than the MiG-15 pilots, and, because of the greater maneuverability, they were then able to change the situation faster to their advantage than were their opponents.

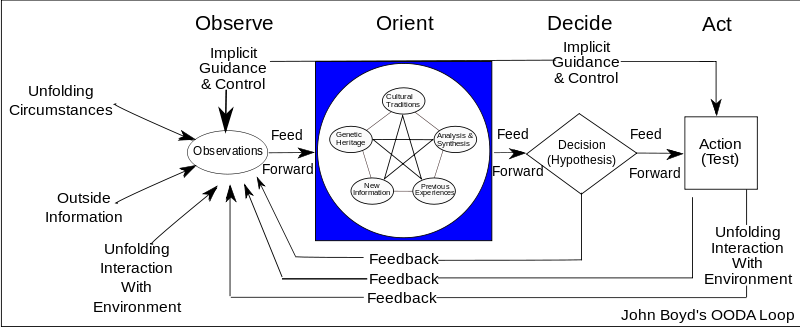

Boyd formalized the competitive process into the four steps ‘Observe’, ‘Orient’, ‘Decide’, ‘Act’ repeated in a loop so as to adapt to evolving circumstances. The pilot who goes through the OODA cycle in the shortest time prevails because his opponent is caught responding to situations that have already changed. The complete OODA loop process is shown above.
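The claim that the faster cycle prevails can be illustrated with a toy model. This is only a sketch under stated assumptions, not anything from Boyd's own work: each side is assumed to observe at the start of a fixed-length cycle and act at the end of it, and any rival action falling strictly inside that window is assumed to invalidate the pending action. The function name `stale_fraction` and the cycle lengths are hypothetical.

```python
def stale_fraction(own_cycle: int, rival_cycle: int, horizon: int) -> float:
    """Fraction of our actions taken on an observation the rival has already invalidated.

    Hypothetical model: each side observes at the start of a cycle and acts at
    the end of it; a rival action strictly inside that window changes the
    situation and makes our pending action stale.
    """
    rival_actions = range(rival_cycle, horizon + 1, rival_cycle)
    own_actions = range(own_cycle, horizon + 1, own_cycle)
    stale = 0
    for act_time in own_actions:
        observe_time = act_time - own_cycle
        # Did the rival act after we observed but before we acted?
        if any(observe_time < t < act_time for t in rival_actions):
            stale += 1
    return stale / len(own_actions)


fast = stale_fraction(own_cycle=2, rival_cycle=5, horizon=20)
slow = stale_fraction(own_cycle=5, rival_cycle=2, horizon=20)
print(f"fast side acts on stale data {fast:.0%} of the time, slow side {slow:.0%}")
```

With these illustrative numbers the faster side acts on a stale picture in 20% of its cycles, while every one of the slower side's actions responds to a situation that has already changed.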

Boyd subsequently hypothesized that all intelligent organisms and organizations undergo a continuous cycle of interaction with their environment. He broke this cycle down into four interrelated and overlapping processes through which one cycles continuously:

Figure: The basic OODA loop

Observation: the collection of data by means of the senses

Orientation: the analysis and synthesis of data to form one’s current mental perspective

Decision: the determination of a course of action based on one’s current mental perspective

Action: the physical playing-out of decisions

Of course, while this is taking place, the situation may be changing. It is sometimes necessary to cancel a planned action in order to meet the changes. This decision cycle is thus known as the OODA loop. Boyd emphasized that this decision cycle is the central mechanism enabling adaptation (apart from natural selection) and is therefore critical to survival.
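For readers who think in code, the four steps and the possibility of cancelling a planned action can be sketched roughly as follows. This is a minimal illustration rather than a definitive implementation: the `OODALoop` class, its callbacks, and the numeric "readings" are all hypothetical names and data invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class OODALoop:
    observe: Callable[[], int]      # Observation: collect data
    orient: Callable[[int], str]    # Orientation: form a perspective from the data
    decide: Callable[[str], str]    # Decision: choose a course of action
    act: Callable[[str], None]      # Action: carry the decision out
    log: List[str] = field(default_factory=list)

    def cycle(self) -> None:
        seen = self.observe()
        perspective = self.orient(seen)
        plan = self.decide(perspective)
        # Re-check the world just before acting; cancel if it has moved on.
        if self.observe() != seen:
            self.log.append(f"cancelled: {plan}")
            return
        self.act(plan)
        self.log.append(f"acted: {plan}")


# Hypothetical sensor readings; the situation changes during the second cycle.
readings = iter([1, 1, 2, 3, 3, 3])
actions: List[str] = []
loop = OODALoop(
    observe=lambda: next(readings),
    orient=lambda level: "threat" if level >= 2 else "clear",
    decide=lambda view: "evade" if view == "threat" else "hold",
    act=actions.append,
)
for _ in range(3):
    loop.cycle()
print(loop.log)
```

The second cycle is abandoned because the observation changed between deciding and acting, which is the cancellation case described above; the loop simply starts again from a fresh observation.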

Boyd theorized that large organizations such as corporations, governments, or militaries possess a hierarchy of OODA loops at tactical, grand-tactical (operational art), and strategic levels. In addition, he stated that the most effective organizations have a highly decentralized chain of command that utilizes objective-driven orders, or directive control, rather than method-driven orders, in order to harness the mental capacity and creative abilities of individual commanders at each level. In 2003, this ‘power to the edge’ concept took the form of a DOD publication, “Power to the Edge: Command … Control … in the Information Age” by Dr. David S. Alberts and Richard E. Hayes. Boyd argued that such a structure creates a flexible “organic whole” that is quicker to adapt to rapidly changing situations. He noted, however, that any such highly decentralized organization would necessitate a high degree of mutual trust and a common outlook that came from prior shared experiences. Headquarters needs to know that the troops are perfectly capable of forming a good plan for taking a specific objective, and the troops need to know that Headquarters does not direct them to achieve certain objectives without good reason.

OODA loop theory became the basis of Air Force fighter tactics, and has been fully adopted and formalized by the US Marine Corps and by special forces units. Boyd’s insights also resulted in major changes to the design of US military aircraft, and he was largely responsible for the development of the F-16. In other areas such as business, the cycle has been adapted to assist in competitive decision making and planning. Regrettably, however, understanding of the OODA loop is patchy at best, and in the software and engineering realm it is virtually non-existent. I discussed this problem in detail as it applies to US intelligence agencies in my first post on this site (see here).

In an earlier post, I discussed in detail the quite distinct concept of the Knowledge Pyramid, and how it impacts the kinds of problems that can be successfully tackled by any given software system (see here). During the long specification and development of Mitopia®, and the study of the shortcomings and demise of other attempts at complex systems, it became clear that there are two consistent and pervasive causes of failure, neither of which has been clearly identified by others, who invariably blame ‘poor management’ for massive software failures. I would contend that blaming the managers is a cop-out and fails to address the fundamental problems.

The first root problem is a lack of adaptability. This stems largely from a complete failure to consider OODA loop theory in software specification and design, although software methodologies make a subordinate contribution to the problem, which I discussed in an earlier post entitled ‘The Bermuda Triangle’.

The second root cause is a failure to understand the ramifications of the knowledge pyramid for the approaches taken, and the data substrates used, to implement a complex system. Once again, I have discussed this in detail in an earlier post, ‘Ziggurats but not Pyramids’.

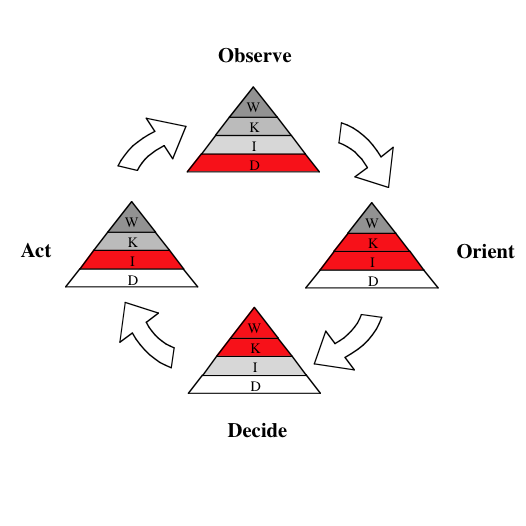

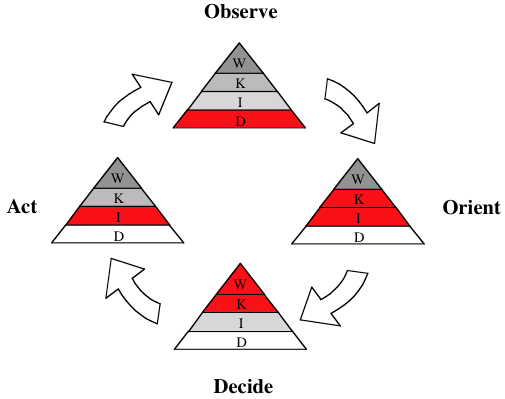

These two realizations taken together led to the creation of the crucial diagram below that I believe is the key to understanding past failures in complex software systems, just as Boyd’s basic OODA loop can be used to understand military failures:

Figure: The Knowledge Pyramid decision cycle (or KP-OODA) loop

The diagram illustrates an Organization’s OODA loop in terms of the levels of the knowledge pyramid that are required to facilitate each step in the cycle. As can be seen, to close the cycle requires knowledge level activities in the ‘orient’ and ‘decide’ steps, and wisdom level (i.e., human in the loop) at the ‘decide’ step. Since we cannot currently create wisdom level software systems, all we can hope to do is provide extensive tools at this step to facilitate human decisions, while automating to the maximum degree possible the ‘orient’ step. To ‘orient’ generally involves integrating massive amounts of disparate data, and hence without automating this step within a system, it will be difficult if not impossible for human beings to keep up with ongoing events, thus making the remaining steps of the cycle moot. Our software systems must recognize that this is what is really going on in the organization at every level, and this is what the system must be fundamentally designed to support.
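The division of labor described here, with integration automated and the decision left to a person, might be sketched as below. This is a deliberately simplified illustration and makes no claim about how Mitopia® does it: the `Record` shape, the `orient` and `decide` functions, the sample data, and the `analyst` stub standing in for a human are all assumptions made for the example.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical raw input shape: (source, entity, fact)
Record = Tuple[str, str, str]


def orient(records: Iterable[Record]) -> Dict[str, List[str]]:
    """Automated 'orient': merge facts from disparate sources per entity."""
    merged: Dict[str, List[str]] = defaultdict(list)
    for source, entity, fact in records:
        merged[entity].append(f"{fact} (via {source})")
    return dict(merged)


def decide(
    picture: Dict[str, List[str]],
    ask_human: Callable[[str, List[str]], str],
) -> Dict[str, str]:
    """'Decide' stays with a person; the software only presents the integrated picture."""
    return {entity: ask_human(entity, facts) for entity, facts in picture.items()}


# Made-up observations arriving from unrelated feeds.
records: List[Record] = [
    ("radar", "vessel-7", "changed course"),
    ("news", "port-A", "strike announced"),
    ("radio", "vessel-7", "stopped transmitting"),
]


def analyst(entity: str, facts: List[str]) -> str:
    # Stand-in for a human judgment call; a real system would prompt a person here.
    return "investigate" if len(facts) > 1 else "monitor"


picture = orient(records)
decisions = decide(picture, analyst)
print(decisions)
```

The point of the split is that `orient` can scale with the volume of incoming data, while `decide` only ever hands a person an already-integrated picture to judge.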

Current generation information systems and design techniques fail to adequately address even the integration step required to ‘orient’, and thus in reality they cannot keep up with evolving events. They are at best retroactive tools to explain what went wrong (or, for the less scrupulous, a means to give compelling demos suggesting your ‘widget’ might have seen it coming). Failure to understand and face up to these truths is, I believe, the fundamental reason why so many complex software system developments tend to fail, or at best fall rapidly into obsolescence.

Internalizing the meaning of the diagram above and applying the resultant understanding at each and every stage of the software life cycle is the key to overcoming current limitations. I will discuss this in more detail in the next post.