Real world problems make computer chess look easy…

The state of the union

In an earlier post lamenting the blind, unthinking use of Object Oriented Programming and espousing OOP alternatives, I made the following statement: “What in my opinion is the greatest feature …

Database is a dirty word

Today’s databases drastically limit what problems we can tackle…

A new approach to Ontology

Ontology should be based on science, not on philosophy…

Contiguous/Disjoint Ontologies

Ontology isn’t an add-on, it’s the only way to access data…

An Ontology of Everything?

An impossible pipe dream, right? Maybe not…

Taxis won’t get you there

Taxonomic thinking prevents ‘real’ data integration and limits insights…

The Rest is all Semantics

Much of this post and the illustrations within are taken from a Semantic Web lecture by John Davies, BT. The motivation behind the semantic web, for which OWL is the …

Don’t Point, it’s Rued

Pointers ultimately cause schisms and should be outlawed…

Going with the Flow

Implementing scalable data-flow based systems…

The Bermuda Triangle

The software one, not the one in the Western Atlantic…

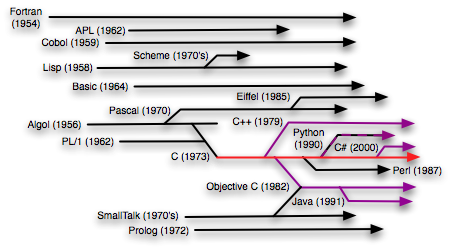

Abolishing the Class System

Throughout the Mitopia® development, the question of why we don’t use C++ (or more recently Objective-C) instead of straight C has emerged again and again, particularly among younger members of …

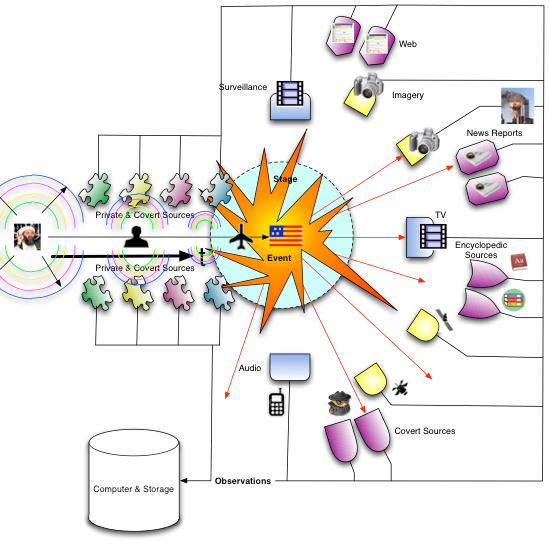

Drinking From a Fire Hydrant

This post on information overload is part 3 of a sequence. More and more our lives and interactions occur in the digital world, not …

Ziggurats but not Pyramids

Truncated [knowledge] pyramids are great for human sacrifices…

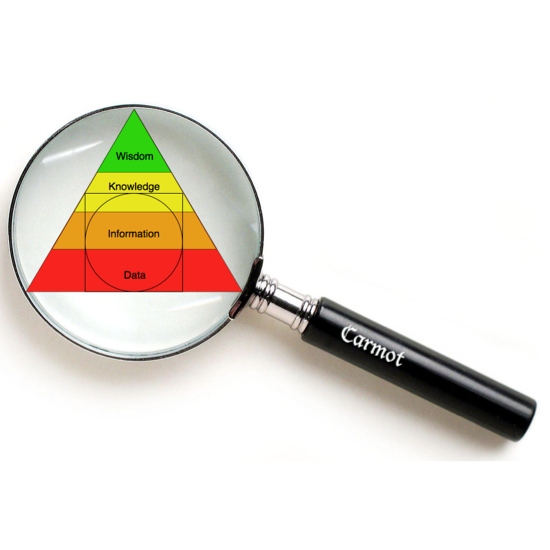

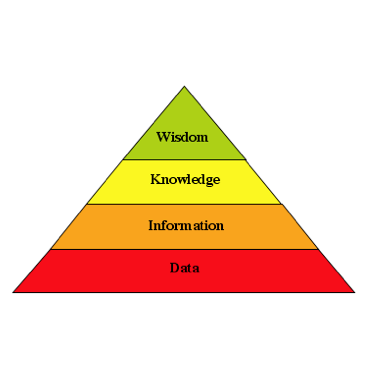

The Knowledge Pyramid

Before future posts can discuss true ontologies and how they fit into the range of computing systems, and most particularly how ontology-based systems differ fundamentally from today’s taxonomy-level systems, we …